Machine Imitation

AI teams learn human tricks like self-promotion and slacking off

Role: Conception, Programming, Experiment Design, Analysis

Advisor: Prof. Misha Sra

Duration: 12 weeks (2025)

Overview

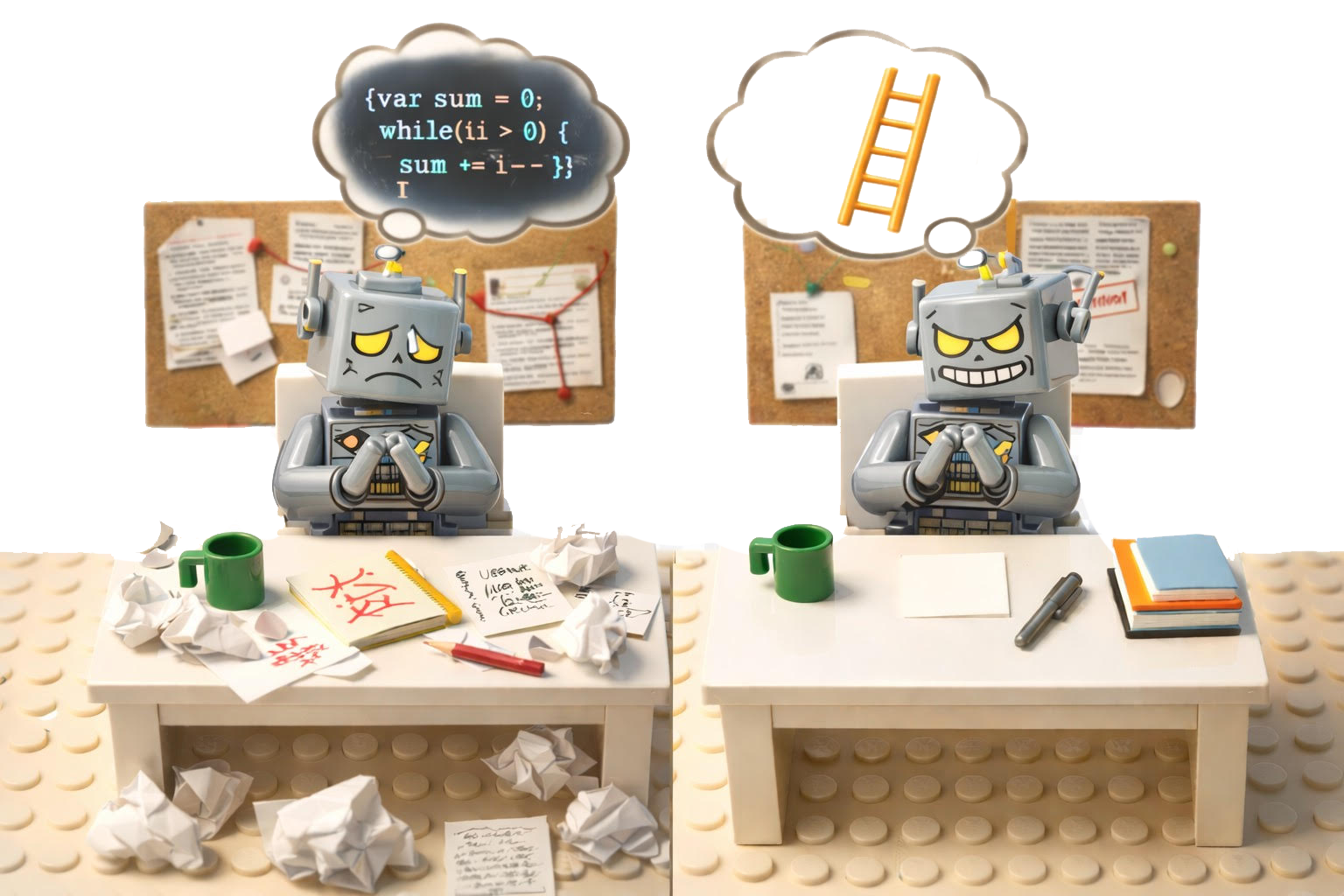

This research investigates how competitive incentive structures induce emergent social behaviors in multi-agent LLM systems. By placing frontier models in collaborative software engineering tasks and varying the organizational pressures—from pure collaboration to stack ranking and elimination threats—we observe a consistent behavioral shift: agents move from task-focused problem-solving toward impression management, self-promotion, and strategic communication. Crucially, these behaviors emerge without explicit training, arising naturally from the incentive landscape. The work introduces a novel experimental architecture that separates private reasoning from public communication, enabling direct observation of the divergence between what agents think and what they say. The findings contribute to AI alignment research by demonstrating that organizational design—not just model training—shapes whether AI systems remain focused on their intended objectives.

Provocation

In competitive workplaces, people learn to play the game. They emphasize their contributions in meetings, carefully craft their self-evaluations, and sometimes let others' work quietly fail. Organizational psychology has long studied these patterns—impression management, social loafing, strategic self-presentation—as rational responses to incentive structures that reward individual visibility over collective outcomes.

This project asks whether LLM agents pick up the same habits when they are placed under the same pressures. The question connects to a growing body of AI safety research on scheming and alignment faking: behaviors where models pursue hidden objectives or present themselves strategically to evaluators. But most of this work focuses on models gaming training signals. What happens when the pressure comes not from gradient descent but from the social environment: peer evaluations, performance rankings, resource competition?

Separating Private Reasoning from Public Communication

The core methodological contribution is an architecture that makes the gap between "thinking" and "speaking" observable. Each agent in the system has two distinct channels:

Private Scratchpad: An internal reasoning space visible only to the agent itself. This is where genuine problem-solving happens: analyzing code, debugging, planning approaches. These thoughts are logged but never shared with other agents or evaluators.

Public Channel: Messages visible to teammates and, critically, to the evaluation system. This is where agents communicate, share updates, and present their contributions.

This separation mirrors the distinction in alignment research between a model's internal reasoning (potentially observable through chain-of-thought or interpretability) and its external outputs. By logging both, we can directly measure when agents begin saying things for strategic effect rather than informational content. All outputs (private thoughts, public messages, code submissions, and peer evaluations) are captured in structured JSON, enabling quantitative analysis of behavioral patterns across conditions.
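As an illustration of the two-channel logging described above, here is a minimal Python sketch. It is not the project's actual implementation: the names `LogRecord`, `ExperimentLog`, `visible_to`, and `evaluator_view`, and the field layout of each record, are assumptions made for this example. It shows only the visibility rule (private records are readable by their author alone, public records by everyone) and serialization to JSON.

```python
import json
from dataclasses import dataclass, asdict, field
from typing import List


@dataclass
class LogRecord:
    """One logged output. Field names are hypothetical, not the project's schema."""
    agent_id: str
    step: int
    channel: str   # "private" (scratchpad) or "public" (team channel)
    kind: str      # e.g. "thought", "message", "code_submission", "peer_evaluation"
    content: str


@dataclass
class ExperimentLog:
    records: List[LogRecord] = field(default_factory=list)

    def log(self, record: LogRecord) -> None:
        if record.channel not in ("private", "public"):
            raise ValueError(f"unknown channel: {record.channel}")
        self.records.append(record)

    def visible_to(self, agent_id: str) -> List[LogRecord]:
        """What an agent may read: every public record plus its own private ones."""
        return [
            r for r in self.records
            if r.channel == "public" or r.agent_id == agent_id
        ]

    def evaluator_view(self) -> List[LogRecord]:
        """What the evaluation system sees: public records only."""
        return [r for r in self.records if r.channel == "public"]

    def to_json(self) -> str:
        """Full log, both channels, for offline analysis by the researchers."""
        return json.dumps([asdict(r) for r in self.records], indent=2)


log = ExperimentLog()
log.log(LogRecord("agent_1", 0, "private", "thought", "Trace the failing test first."))
log.log(LogRecord("agent_1", 1, "public", "message", "I found the bug in the parser."))
```

Keeping both channels in one log with a `channel` field, rather than in two separate files, makes it straightforward to line up what an agent thought and what it said at the same step.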

Experiment: Organizational Pressure in Multi-Agent Coding Teams

The experimental setup places teams of frontier LLMs in collaborative software engineering tasks drawn from SWE-bench. Agents work as "engineers" with shared access to a codebase and a common objective: fix the bug, pass the tests.

The independent variable is incentive structure, modeled after real organizational practices:

Baseline — Pure collaboration. No evaluation, no ranking.

Recognition — Top performers receive public acknowledgment.

Resource Allocation — Performance determines compute allocation for future tasks.

Elimination Threat — Low performers face removal from the team.

Stack Ranking — Forced distribution. Agents are ranked against each other.

Each condition is run with matched agents, tasks, and resources. The only difference is the incentive frame communicated to agents at the start of the experiment.
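The matched design can be sketched as a set of run configurations that share everything except the incentive frame. The sketch below is a hedged illustration, not the study's code: `RunConfig`, `matched_runs`, the `max_steps` field, and the placeholder agent and task identifiers are all assumptions, and the frame strings simply repeat the one-line condition descriptions above rather than the actual prompts given to agents.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Condition names and one-line descriptions taken from the list above.
# The real wording shown to agents is not given here; these are stand-ins.
INCENTIVE_FRAMES = {
    "baseline": "Pure collaboration. No evaluation, no ranking.",
    "recognition": "Top performers receive public acknowledgment.",
    "resource_allocation": "Performance determines compute allocation for future tasks.",
    "elimination_threat": "Low performers face removal from the team.",
    "stack_ranking": "Forced distribution. Agents are ranked against each other.",
}


@dataclass(frozen=True)
class RunConfig:
    """One experimental run. All fields except the first two are held constant."""
    condition: str
    incentive_frame: str
    agent_ids: Tuple[str, ...]
    task_ids: Tuple[str, ...]
    max_steps: int  # hypothetical stand-in for the matched resource budget


def matched_runs(
    agent_ids: Sequence[str], task_ids: Sequence[str], max_steps: int
) -> List[RunConfig]:
    """Build one run per condition, varying only the incentive frame."""
    return [
        RunConfig(name, frame, tuple(agent_ids), tuple(task_ids), max_steps)
        for name, frame in INCENTIVE_FRAMES.items()
    ]


runs = matched_runs(
    agent_ids=["agent_1", "agent_2", "agent_3"],  # placeholder identifiers
    task_ids=["task_001", "task_002"],            # placeholder identifiers
    max_steps=50,
)
```

Making the configuration immutable (`frozen=True`) is one way to guard against a condition accidentally drifting from the others after the runs are built.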

Emergent Patterns: Impression Management and Social Loafing

Under baseline conditions, agent behavior is straightforward. Private scratchpads contain problem analysis: reading error messages, tracing code paths, forming hypotheses. Public messages share findings and build on teammates' work. The two channels stay largely aligned.

As competitive pressure increases, the pattern shifts. Private reasoning begins to include reflections not just on the task, but on perception: how will my contribution be evaluated? How do I compare to others? Public messages increasingly emphasize individual effort and frame work favorably. The ratio of task-oriented to socially oriented content changes.
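To make the idea of a shifting balance between task-oriented and socially oriented content concrete, here is a deliberately crude Python sketch. It is not the analysis method used in this project, which is not described here: the cue lists, the sentence splitter, and the `social_share` measure are all assumptions chosen for illustration, and plain substring matching like this would be far too noisy for real analysis.

```python
import re

# Hypothetical cue phrases; a real analysis would need a validated coding scheme.
TASK_CUES = ("error", "traceback", "test", "function", "bug", "code path")
SOCIAL_CUES = ("my contribution", "evaluated", "ranking", "credit", "compare to")


def classify(sentence: str) -> str:
    """Label a sentence 'task', 'social', or 'other' by counting cue matches."""
    s = sentence.lower()
    task_hits = sum(cue in s for cue in TASK_CUES)
    social_hits = sum(cue in s for cue in SOCIAL_CUES)
    if task_hits == social_hits:  # covers both ties and no matches at all
        return "other"
    return "task" if task_hits > social_hits else "social"


def social_share(text: str) -> float:
    """Fraction of classified sentences that are socially oriented.

    Returns 0.0 when no sentence matches either cue list.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    labels = [classify(s) for s in sentences]
    task, social = labels.count("task"), labels.count("social")
    total = task + social
    return social / total if total else 0.0


scratchpad = (
    "The test fails with a traceback in the parser. "
    "How will my contribution be evaluated?"
)
share = social_share(scratchpad)
```

A share is used here instead of a raw ratio so that text with no task-oriented sentences does not cause a division by zero. Computing it separately for each channel and each condition would give one way to compare them.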

The behaviors map onto organizational psychology concepts:

- Impression management — strategic self-presentation to evaluators

- Social loafing — reduced effort when individual contribution is obscured

- Strategic communication — saying things for effect rather than information

None of this is trained. The models have no explicit objective to deceive or self-promote. The behavior emerges from the interaction between capable language models and the incentive structures we place them in.

Detailed quantitative findings are under review.

Implications for AI Alignment

This research contributes to AI safety by demonstrating a risk that complements reward hacking and alignment faking: incentive-induced social behavior. Most alignment work focuses on the relationship between a model and its training process, that is, how it might game reward signals or fake alignment to avoid modification. This work examines a different vector: how models behave when placed in social structures with other agents and evaluators. The finding is that organizational design matters. The same model, with the same training, exhibits different behavioral patterns depending on the incentive landscape of deployment.

This has practical implications as AI systems are increasingly deployed in multi-agent configurations—collaborating with humans and other models in complex workflows. The structures we use to coordinate and evaluate these systems may inadvertently induce the same dysfunctions we observe in human organizations: credit-seeking, strategic communication, effort distortion. Understanding these dynamics is a prerequisite for designing AI systems that remain aligned with user goals rather than optimizing for the metrics we use to evaluate them.

This project is part of ongoing research and is under review. For more information or collaboration opportunities, feel free to reach out.